There has been much discussion recently about ChatGPT, a chatbot that can write meaningful answers to questions. I haven’t had time to test it properly, and it was unavailable when I tried to check it today. Instead, I have played around with YouWrite, a service that can write text based on limited input.

I thought it would be interesting to ask it to write about something I know well, so I asked it to write a text based on an abbreviated version of the abstract of my new book:

A techno-cognitive look at how new technologies are shaping the future of musicking. “Musicking” encapsulates both the making of and perception of music, so it includes both active and passive forms of musical engagement. But at its core, it is a relationship between actions and sounds, between human bodies and musical instruments. Viewing musicking through this lens and drawing on music cognition and music technology, Sound Actions proposes a model for understanding differences between traditional acoustic “sound makers” and new electro-acoustic “music makers.” What is a musical instrument? How do new technologies change how we perform and perceive music? What happens when composers build instruments, performers write code, perceivers become producers, and instruments play themselves? The answers to these pivotal questions entail a meeting point between interactive music technology and embodied music cognition: “embodied music technology”.

From this, I generated three versions for different audiences.

Test 1 - A blog for students

I began by asking it to write a blog text in a “professional” tone targeted at “students”:

In the past, musical instruments were primarily acoustic. With the advent of new technologies such as digital audio workstations and computer programming languages, however, music makers have been able to create electronic sounds that can be manipulated in ways not previously possible with acoustic sound sources. This has opened up a world of possibilities for composers and performers alike: they are no longer limited by traditional physical constraints when creating or performing music.

The implications of this shift go beyond simply making it easier to produce certain types of sounds; technology is changing how we interact with music on a fundamental level. For instance, interactive systems allow us to control sonic parameters directly through body movements using motion capture devices like Kinects or Leap Motion controllers – thus allowing us to literally “play” our own bodies as if they were instruments themselves! Similarly, algorithmic composition techniques allow musicians and producers to generate entire pieces from scratch without ever having touched an instrument at all! These advancements in technology are enabling unprecedented levels of creativity among musicians today – something which was unimaginable just decades ago!

As students studying musicking in its various forms (both traditional and modern), it is important for us understand the impact these new technologies have had on both production-oriented practices (such as composing) and performance-oriented practices (such as playing). We must also consider how these changes affect our perception of what constitutes “music” itself — especially since many contemporary compositions blur boundaries between genres while simultaneously challenging conventional notions about what makes something musically meaningful or aesthetically pleasing. In short: taking a techno-cognitive approach towards understanding how new technologies shape future trends within musicking will help equip us with essential tools needed for navigating this rapidly evolving landscape!

That is not bad at all. The text flows nicely, and it mostly makes sense. I don’t write much about digital audio workstations (DAWs) in my book, but it is a good connection. It also mentions specific and relevant technologies I don’t write about myself (Kinect and Leap Motion).

Test 2 - A blog for researchers

I was curious about what would happen if I changed the target audience to “researcher”, while keeping the blog and “professional” tone settings:

As researchers, we are constantly looking for new ways to explore and understand the world around us. With the advent of digital audio workstations and computer programming languages, music makers now have access to a wide range of tools that allow them to create sounds in ways not previously possible with acoustic sound sources. This shift has had far-reaching implications not only for how composers can produce music but also how performers interact with it on a fundamental level.

For instance, interactive systems make use of motion capture devices such as Kinects or Leap Motion controllers so musicians can “play” their own bodies like instruments – allowing them unprecedented control over sonic parameters directly through body movements! Similarly, algorithmic composition techniques enable musicians to generate complex musical structures from simple inputs without having any prior knowledge about traditional musical theory or notation. These advances open up exciting possibilities for both research into music production and performance practices alike!

The potential applications of this technology extend beyond simply creating novel sounds; they offer an opportunity for us as researchers to gain insight into human behavior by studying how people interact with these technologies in different contexts – be it composing songs collaboratively online or performing live shows using motion sensors instead of conventional instruments. Ultimately, this shift towards electronic sound sources is enabling us all unlock new creative horizons while simultaneously advancing our understanding about ourselves and our relationship with art forms such as music!

I would have expected the content to change more, but it is quite similar to the first test. The main difference is the writing style. It is also interesting that every sentence is worded differently from the first example, even though the content is much the same.

Test 3 - A persuasive blog for professors

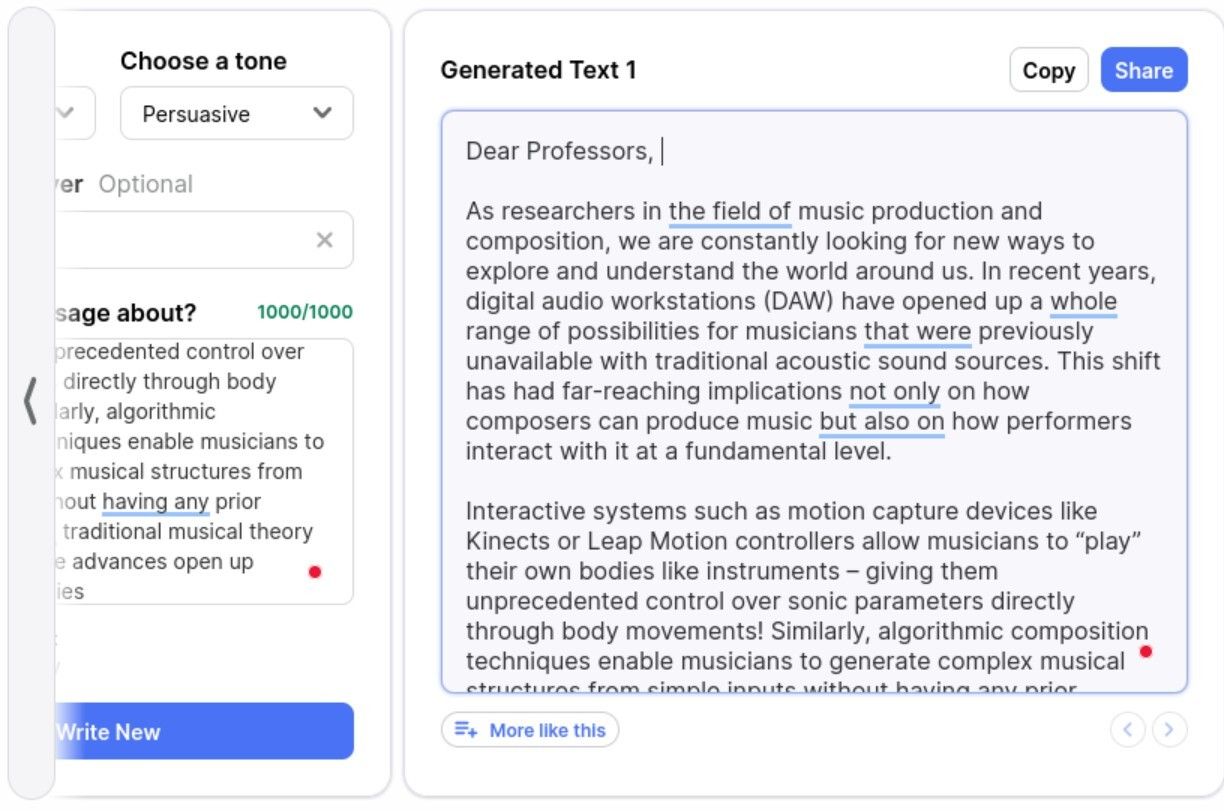

Finally, I switched to the “persuasive” setting and changed the target group to “professors”:

Dear Professors,

As researchers in the field of music production and composition, we are constantly looking for new ways to explore and understand the world around us. In recent years, digital audio workstations (DAW) have opened up a whole range of possibilities for musicians that were previously unavailable with traditional acoustic sound sources. This shift has had far-reaching implications not only on how composers can produce music but also on how performers interact with it at a fundamental level.

Interactive systems such as motion capture devices like Kinects or Leap Motion controllers allow musicians to “play” their own bodies like instruments – giving them unprecedented control over sonic parameters directly through body movements! Similarly, algorithmic composition techniques enable musicians to generate complex musical structures from simple inputs without having any prior knowledge about traditional musical theory or notation. These advances open up exciting possibilities that could be explored further by our students if given access to these resources in our classrooms and studios.

By providing our students with access to this technology they will be able develop an understanding of interactive performance systems which may lead them down paths they never thought possible before! Furthermore allowing them hands-on experience working within these contexts provides valuable insight into creative processes as well as potential career opportunities outside academia should they choose pursue those avenues after graduation.

As educators it is important for us continue exploring new technologies so we can better prepare our students for success both inside and outside classroom walls - so let’s get started today!

Here, it broke with the three-paragraph style of the first two examples. Many of the sentences make sense, but the text also exposes some flaws. In the two previous examples, it (correctly) described Kinects and Leap Motion controllers as input devices for interactive systems. Here, it calls them “interactive systems”, which does not make sense. Still, several other arguments show that it has “read” a lot of relevant music technology literature.

Conclusion

Dear professors, as university teachers, we should be aware that our students use these tools when writing their assignments and exams. Such usage will become increasingly difficult to detect, since it will not be flagged by traditional plagiarism checkers. Careful reading can reveal some logical flaws, but not all of them. One solution is to rely more on supervised exams without such tools, or on oral exams. Even better would be to base more of the assessment on what goes on in the classroom.

We will probably see increased use of such tools for academic writing and application development. The quantity of written text will go up, but the time we have to read it will remain the same. We can counteract this by aiming to publish less and focusing more on developing good open research practices. Sharing data, methods, and so on is a good way of showing how you work as a researcher, and it allows for verification, replicability, and reuse. And then, the AIs can write the final papers for us…