Last year, I wrote about video-based motion analysis of kayaking. Those videos were recorded with a GoPro Hero 8, and I used them to test some of the video visualization methods in the Musical Gestures Toolbox for Python. This summer, I am testing some 360-degree cameras for my upcoming AMBIENT project, so I thought I should take one of them, a GoPro Max, kayaking in the Oslo fjord. Here are some impressions of the trip (and the recording).

Horizon leveling

I stumbled upon the “horizon leveling” feature by accident while going through the settings on the GoPro Max. It stabilizes the recorded image so that the horizon always stays level. I haven’t found any technical details about how it works, but I assume it uses the built-in gyroscope. As it turns out, the feature also appears to be available on the GoPro Hero 9 and 10.

This feature works amazingly well, as can be seen from an excerpt of my kayaking adventure below:

Video visualizations

The Musical Gestures Toolbox for Python is under active development, so I thought it would be interesting to test some of its video visualization methods on the kayaking video. The full recording is 1.5 hours long, which makes it a good test case for the toolbox. After all, one of the aims of the toolbox is to provide solutions for visualizing long video recordings without scene changes.

My kayaking videos resemble my recordings of music and dance performances in that both are continuous single-camera recordings. So I tested some of the basic visualization techniques.

A “keyframe” display based on nine images sampled from the recording. These give “snapshots” of the scenery but don’t say much about the motion.
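For the curious, the idea behind such a display can be sketched in a few lines of NumPy. This is a minimal illustration, not the toolbox's implementation: it assumes the video has already been decoded into an array of shape (frames, height, width, channels), and the function names are hypothetical. It picks nine evenly spaced frames and tiles them in a 3×3 grid.

```python
import numpy as np

def sample_indices(n_frames: int, n_samples: int = 9) -> np.ndarray:
    # Evenly spaced frame indices from the first to the last frame (inclusive).
    return np.linspace(0, n_frames - 1, n_samples).round().astype(int)

def keyframe_grid(frames: np.ndarray, rows: int = 3, cols: int = 3) -> np.ndarray:
    """Tile rows*cols evenly sampled frames into a single image.

    frames: array of shape (T, H, W, C). Returns shape (rows*H, cols*W, C).
    """
    idx = sample_indices(len(frames), rows * cols)
    picked = frames[idx]                        # (rows*cols, H, W, C)
    _, h, w, c = picked.shape
    grid = picked.reshape(rows, cols, h, w, c)  # group the frames by grid row
    grid = grid.transpose(0, 2, 1, 3, 4)        # (rows, H, cols, W, C)
    return grid.reshape(rows * h, cols * w, c)  # frame k lands at row k // cols, column k % cols
```

For a 1.5-hour recording, one would rather read only the nine sampled frames from disk than decode the whole video into memory.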

A horizontal videogram of the whole recording (time running from left to right) shows more of what happened. Here you can clearly see that the horizon leveling worked flawlessly throughout. Interestingly, “ascending” lines appear at various intervals. These are there because I kayaked around an island and kept turning right.

The vertical videogram shows the sideways motion. It is, perhaps, less informative than the horizontal videogram, but more beautiful. The yellow line in the middle is the kayak.
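Both videograms boil down to averaging each frame along one spatial axis and stacking the results over time. Here is a minimal NumPy sketch of that idea, assuming decoded frames of shape (height, width, channels); the function name is my own, and it is a simplified illustration rather than the toolbox's code. Collapsing the width gives one column per frame (the horizontal videogram), while collapsing the height gives one row per frame (the vertical videogram, with time running top to bottom in this sketch).

```python
import numpy as np

def videograms(frames):
    """Return (horizontal, vertical) videograms from an iterable of frames.

    Each frame has shape (H, W, C). Frames are processed one at a time,
    so the input can be a lazy reader instead of an in-memory array.
    """
    columns, rows = [], []
    for frame in frames:
        frame = np.asarray(frame, dtype=np.float64)
        columns.append(frame.mean(axis=1))  # average across width  -> (H, C)
        rows.append(frame.mean(axis=0))     # average across height -> (W, C)
    horizontal = np.stack(columns, axis=1)  # (H, T, C): time left to right
    vertical = np.stack(rows, axis=0)       # (T, W, C): time top to bottom
    return horizontal, vertical
```

Since only one averaged row and column are kept per frame, the same function could be fed frames straight from a video reader (for example OpenCV's `cv2.VideoCapture`) without holding the whole recording in memory.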

An average image of the whole recording blurs out all the details but leaves the essential information: the kayak, the fjord, and the horizon.
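An average image is simply the pixel-wise mean over all frames. A minimal sketch, assuming frames of identical shape arrive one at a time (the function name is hypothetical, not the toolbox's API):

```python
import numpy as np

def average_image(frames):
    """Pixel-wise mean of all frames, accumulated one frame at a time."""
    total = None
    count = 0
    for frame in frames:
        # Accumulate in float64 so that summing many uint8 frames cannot overflow.
        frame = np.asarray(frame, dtype=np.float64)
        if total is None:
            total = np.zeros_like(frame)
        total += frame
        count += 1
    if count == 0:
        raise ValueError("no frames to average")
    return total / count
```

Anything that moves through the scene contributes to only a few frames and is washed out, while static elements such as the kayak, the water, and the (leveled) horizon remain.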

Audio analysis

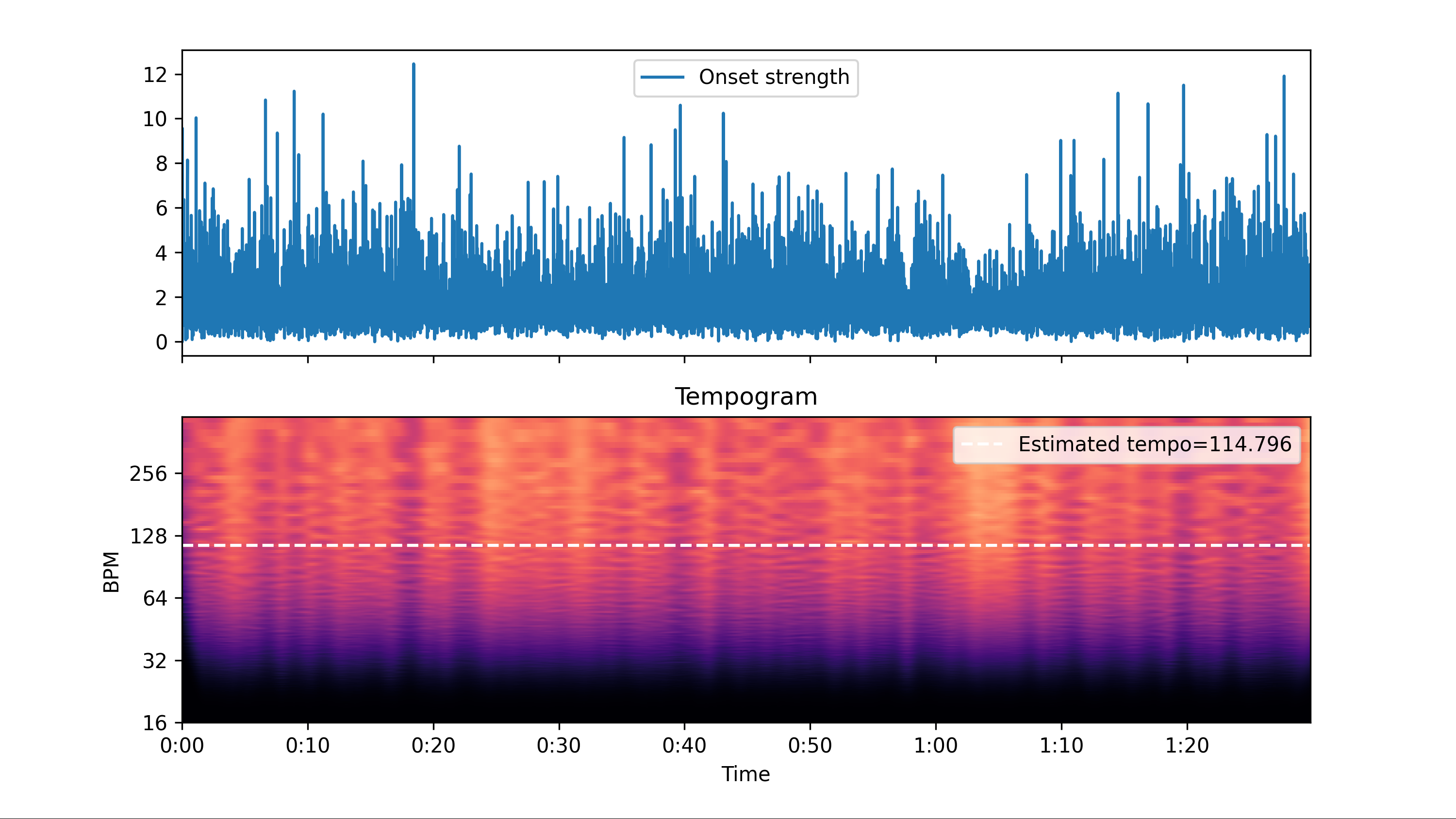

Kayaking is a rhythmic activity, so I was also interested in whether I could find any patterns in the audio signal. For now, I have only calculated a tempogram, which estimates the tempo of my kayak strokes at 114 BPM. I am not sure whether that is good or bad (I am only a recreational kayaker), but I will try to make some new recordings and compare.
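A tempogram shows how strongly different tempi are present over time, and a single estimate like 114 BPM summarizes it. The core idea, autocorrelating an onset-strength envelope and picking the strongest periodicity within a plausible tempo range, can be sketched as follows. This is a simplified, global sketch under my own assumptions (a precomputed onset envelope sampled at `frame_rate` Hz and a search range of 60 to 180 BPM), not the toolbox's tempogram code.

```python
import numpy as np

def estimate_tempo(onset_env, frame_rate, bpm_min=60.0, bpm_max=180.0):
    """Estimate a global tempo (BPM) from an onset-strength envelope."""
    x = np.asarray(onset_env, dtype=np.float64)
    x = x - x.mean()
    n = len(x)
    # Zero-pad to 2n so the FFT yields linear (not circular) autocorrelation.
    spec = np.fft.rfft(x, 2 * n)
    ac = np.fft.irfft(spec * np.conj(spec), 2 * n)[:n]
    # Convert the tempo range to a range of lags (in envelope samples).
    lag_min = int(np.ceil(60.0 * frame_rate / bpm_max))
    lag_max = min(int(np.floor(60.0 * frame_rate / bpm_min)), n - 1)
    if lag_min > lag_max:
        raise ValueError("envelope too short for the requested tempo range")
    # The lag with the strongest self-similarity is taken as the beat period.
    best = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return 60.0 * frame_rate / best
```

The FFT keeps this fast even for an envelope covering 1.5 hours. A real tempogram applies the same kind of analysis in short sliding windows, which is what makes it possible to see tempo changes along the trip.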

Tempogram of the audio from the kayaking video. It is made from a resampled video file, hence the short duration (the original video is 1.5 hours long).

Solid toolbox

After using the Musical Gestures Toolbox for Python more actively over the last few weeks, I can see that we have now managed to get it into a state where it works really well. Most of the core functions are stable, and they can be combined in various ways, just as a toolbox should allow. There are still some optimization issues to sort out, particularly when it comes to improving the creation of motion videos. But overall, it is a highly versatile package.