At the NordicSMC conference last week, I was part of a panel discussing the topic Rigorous Empirical Evaluation of SMC Research. This was the original description of the session:

The goal of this session is to share, discuss, and appraise the topic of evaluation in the context of SMC research and development. Evaluation is a cornerstone of every scientific research domain, but is a complex subject in our context due to the interdisciplinary nature of SMC coupled with the subjectivity involved in assessing creative endeavours. As SMC research proliferates across the world, the relevance of robust, rigorous empirical evaluation is ever-increasing in the academic and industrial realms. The session will begin with presentations from representatives of NordicSMC member universities, followed by a more free-flowing discussion among these panel members, followed by audience involvement.

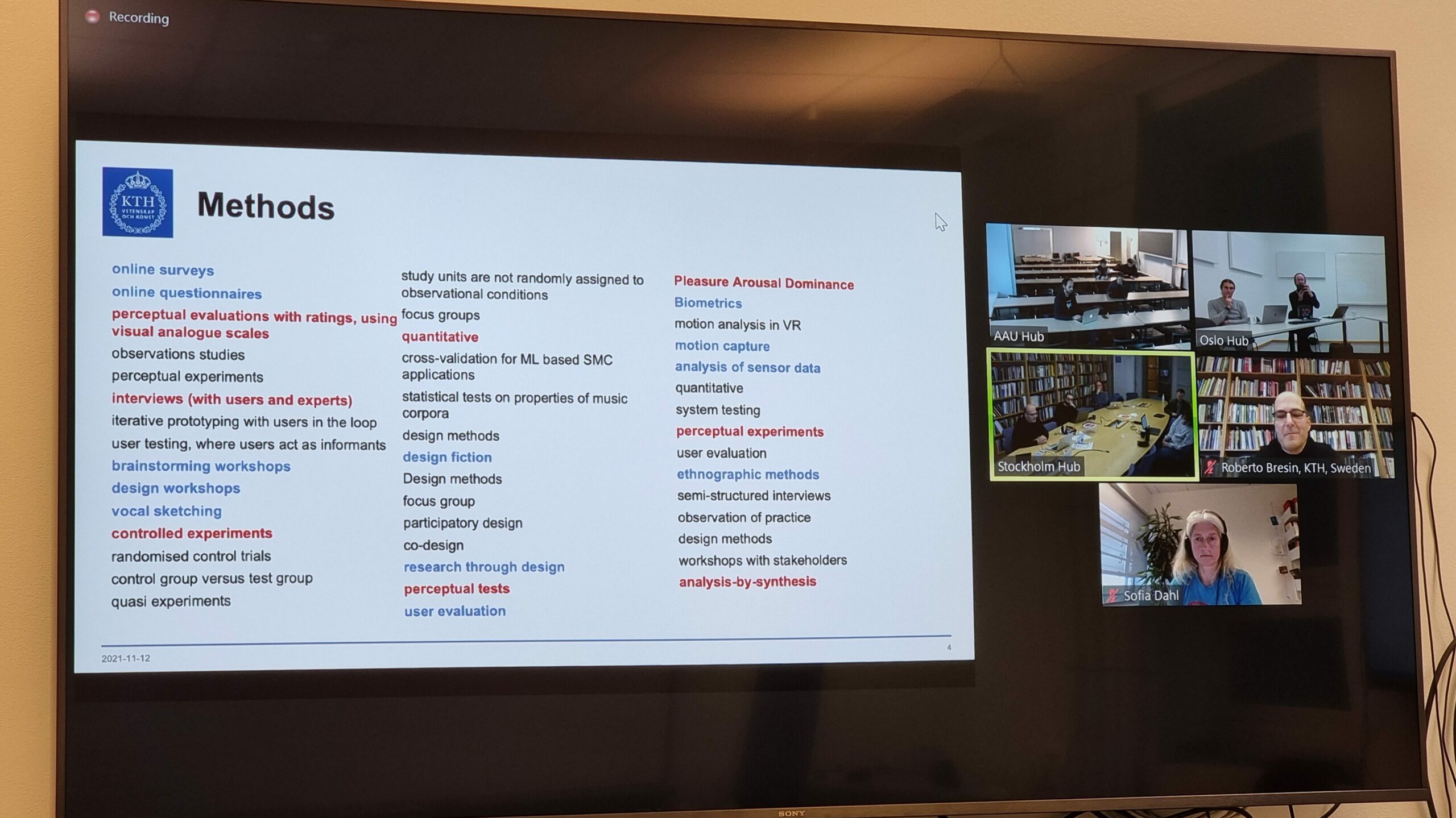

The discussion was moderated by Sofia Dahl (Aalborg University). Besides me, the panel consisted of Nashmin Yeganeh (University of Iceland), Razvan Paisa (Aalborg University), and Roberto Bresin (KTH).

The challenge of interdisciplinarity

Everyone on the panel agreed that rigorous evaluation is important. The challenge is to figure out which types of evaluation are useful and feasible within sound and music computing research. This was effectively illustrated by a list of the different methods employed by the researchers at KTH.

Roberto Bresin had divided the KTH list into methods that they have been working with for decades (in red) and newer methods that they are currently exploring. The challenge is that each of these methods requires different knowledge and skills, and they all have different types of evaluation.

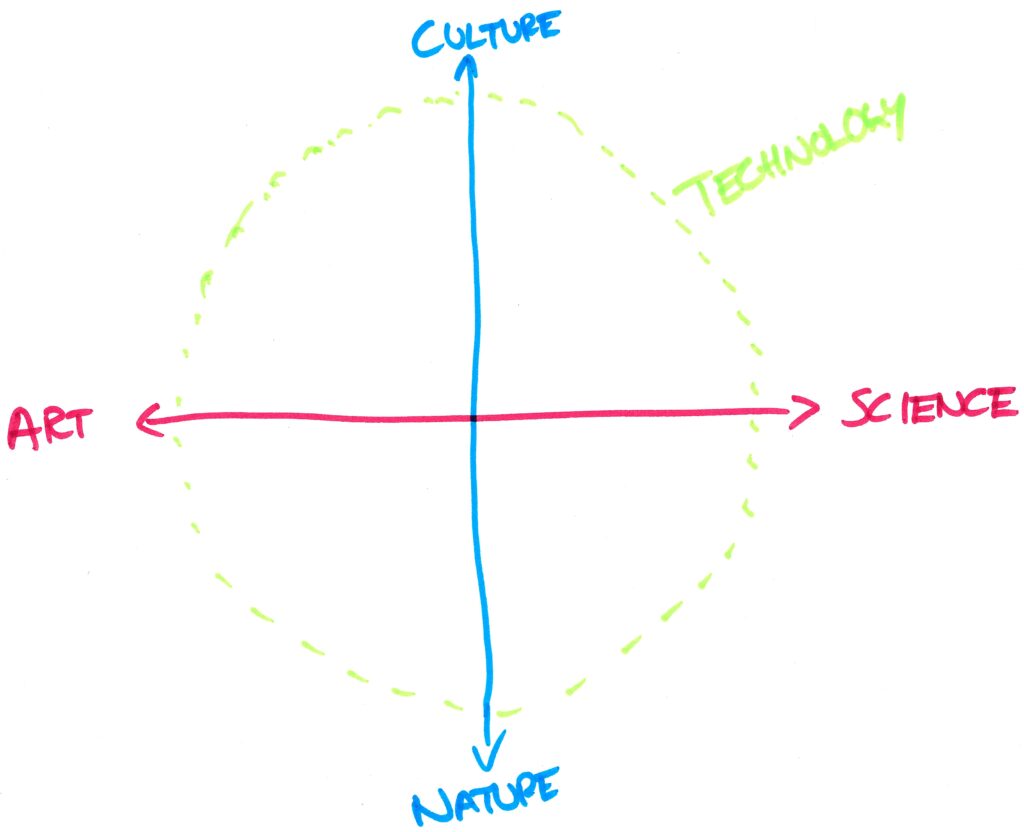

Although we have a slightly different research profile at UiO than at KTH, we also have a breadth of methodological approaches in SMC-related research. I pointed to a model I often use to explain what we are doing:

The model has two axes. One is a continuum between artistic and scientific research methods and outputs. The other is a continuum between research on natural phenomena and research on cultural phenomena. In addition, we develop and use various types of technologies for all of these.

The reason I like to bring up this model is to explain that things are connected. I often hear that artistic and scientific research are completely different things. Sure, they are different, but there are also commonalities. Similarly, there is an often unnecessary divide between the humanities and the social and natural sciences. True, they have different foci, but when studying music, we need to take all of them into account. Music involves everything from “low-level” sensory phenomena to “high-level” emotional responses. One can focus on one or the other, but if we really want to understand musical experiences – or make new ones, for that matter – we need to see the whole picture. Thus, evaluations of whatever we do also need to take a holistic approach.

Open Research as a tool for rigorous evaluation

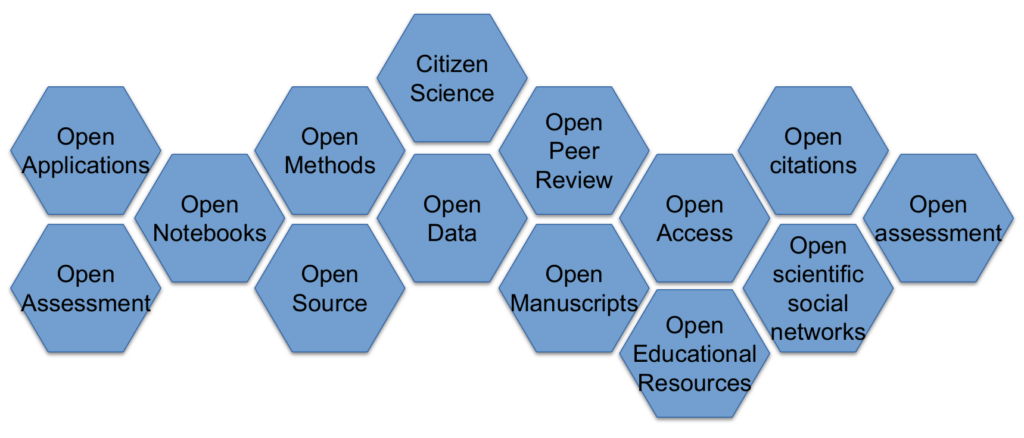

My entry into the panel discussion was that we should use the ongoing transition to Open Research practices as an opportunity to also perform more rigorous evaluations. I have previously argued why I believe open research is better research. The main argument is that sharing things (methods, code, data, publications, etc.) openly forces researchers to document everything better. Nobody wants to make sloppy things publicly available. So the process of making all the different parts of the open research puzzle openly available is a critical component of a rigorous evaluation.

In the process of making everything open, we realize, for example, that we need better tools and systems. We also experience that we need to think more carefully about privacy and copyright. That is also part of the evaluation process and lays the ground for other researchers to scrutinize what we are doing.

Summing up

One of the challenges of discussing rigorous evaluation in the “field” of sound and music computing is that we are not talking about one discipline with one method. Instead, we are talking about a set of approaches to developing and using computational methods for sound and music signals and experiences. If you need to read that sentence a couple of times, it is understandable. Yes, we are combining a lot of different things. And, yes, we are coming from different backgrounds: the arts and humanities, the social and natural sciences, and engineering. That is exactly what is cool about this community. But it is also why it is challenging to agree on what a rigorous evaluation should be!